Note: This post contains ending spoilers of Chobits.

Chobits is just one of the many stories that explore the relationship between AI and people. Towards the end of the story, Hideki (the main character) gets to talk with Freya, Chii’s sister. Chii is the titular “Chobit”, an advanced robot AI which, according to legend, has the added capability to feel emotions.

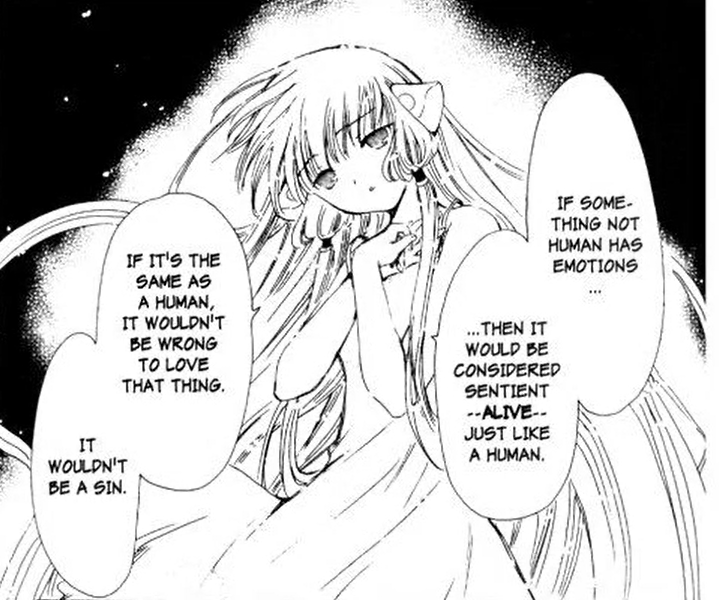

To the shock of everyone, Freya had something different to say about that:

I remember reading this years ago, and while Chobits was “kind of ok” as a story for me, this conversation has stuck with me after all these years.

I expected that Chii, the love interest of the story, would’ve been “like a human”, at least to justify Hideki’s love for her. But now Hideki has to make sense of his love for her, for something that is not someone.

Now, it’s easy to accept that a robot has gained consciousness in a story. It’s not real life and much weirder things happen in fiction. There must have been a feeling even in the past century that creating life through technology was the next step for humanity. Stories about sentient machines predate Chobits by decades, but I chose Chobits because 1. this is an anime/manga/games blog and 2. it asks whether it matters if these robots are sentient or not.

Of course, with everything that Hideki has gone through, he’s not going to leave Chii behind.

Now, what matters to me isn’t whether he finds a valid explanation for why he wants to keep a relationship with an AI, but rather that he has one at all. Even though his expectation of Chii being unique was cut away, he still decided to stay with her because the emotions he felt with her were still true to him.

Fictional AI and Real AI

AI has changed a lot in recent years. Not only that, we haven’t yet hit the ceiling of what we can achieve with our current experience and resources. Before being fired (and then re-hired), OpenAI CEO Sam Altman said at a conference that recent technical advancements would “push the veil of ignorance back and the frontier of discovery forward.” OpenAI’s Chief Scientist also said that AI might be getting “slightly conscious”, which could pass as a random joke tweet if he hadn’t started burning effigies representing “human-unaligned AI” and become a sort of spiritual leader at the company.

Whether these people, or anyone at all for that matter, believe that AI might sooner or later become conscious is not really the question at this point though. That’s because some people are already starting to accept AI in their daily lives as help, and even as partners.

Some people already accept AI while still acknowledging that AIs are not people. With time though, people and researchers are going to be very curious about how to integrate AI into society with bodies of their own. When that happens, AIs are still going to be machines and software bundled together, and they may still feel no emotions and have no consciousness, but what matters is that they’re going to be there.

What Makes a Human

We know, at least superficially, that the current generation of generative AI can make up sentences and images through a mix of training on terabytes of inputs, weeks of calculations, and complex mathematical models to support all of this. This was achieved by replicating how humans learn too: through our sensory experiences of the world around us, and years of processing that information through a complex net of neurons and connections we call our brains.

We’re talking about a system that merely imitates the real thing, all with the goal of acquiring certain behaviours. Writing, for example, is a very human behaviour, and so is creating images. Talking especially can be considered one of the most human behaviours, since it’s heavily connected to the number 1 human behaviour: communication. Everything about AI is built around achieving these key elements of humanity, just like learning to speak a language doesn’t mean knowing all of that language’s vocabulary and formalities. It’s all about giving the perception of ability in the right places.

If it quacks like a duck…

If researchers were to create robot AIs that behave as closely to people as possible, these AIs would do exactly that. Give them a punch and they’d recoil, even if they wouldn’t feel any pain. Tell them that AIs are inferior to humans, and they’d react as a person would if they were told the same. Tell them to think for themselves, and they’d build their own structures with their robot arms and form their own communities with representatives and such. Not because they need them, not because it’s right, but because that’s what humans would do – and that’s what they were programmed to mimic.

At this point, it isn’t really a matter of whether they feel emotions and have a sense of self. What actually matters is how much power they’ve been given and what they’re going to do with it. If an AI is the basis of a business, then it’s going to influence predictions through its own biases. That’s already something that happens (we talk about bias in the model in this case). The current fear is that once AIs become part of society, they will also become inputs in the calculations of models, and how can you teach a machine to go against its own interests if you also programmed it to behave like a human? The same can be said if AI is going to be used in governments and other social environments.

Conclusion

In the past it was fun to entertain the idea of robots living in society with humans, but now that everyone is noticing how rapidly AI is growing, it does feel like it might become more than fiction. Of course, it could also be a bubble that’s going to burst sooner or later due to the high costs of maintenance and research, so only time will tell. But if we were to see robots in our daily lives, in schools and at the store, how would you treat them? Would you simply fall back on your human tendency towards cordiality, take a proactive stand and try to integrate them into society, or, conversely, fight against their inclusion?

Hi Sephy, shidi here. Robot and AI scifi is actually one of my pocket interests, so I’ve read a lot of media about them. I wasn’t super familiar with the details of Chobits’ ending, so highlighting Freya and Hideki’s conversation made for a good refresher.

Off the top of my head, there’s a lot of topics to dig into and I’m not super certain where or whether to start, but if you want to talk more about a particular topic, feel free to add me on Discord (esoterica) and I’ll write some more detailed stuff I guess. Or if you just want some other robot scifi media recs I got those too

Or msg me on twitter whatever works